Meet AirTen: the fastest audio real-time runtime period

Jul 24, 2025

/

We’re thrilled to officially launch AirTen - ai-coustics’ purpose-built neural network runtime. Designed especially for real-time audio AI, AirTen delivers unmatched speed, safety, and portability.

And the best part? It’s packed into a runtime smaller than the average photo stored on your phone and exclusively powers the models in our SDK.

What is AirTen?

AirTen (short for AirTensors) is our custom runtime for neural network inference - the critical phase where models move from training to action, executing in real-world applications and devices. In audio AI every millisecond counts and inference speed isn't just a ‘nice-to-have’ - it’s essential.

So we built a new kind of engine. One that has:

A pure no_std Rust runtime

Zero dependencies, ensuring unmatched portability

A build tiny enough for microcontrollers, yet powerful enough for desktop and web

Integration into ai-coustics’ model delivery pipeline and available with our SDK

Why not use existing runtimes?

There are plenty of general purpose inference engines out there, but none optimized for real-time, resource-constrained audio environments. Here’s what we ran into:

They couldn’t guarantee consistent timing - which can lead to pops, clicks, and audio glitches

They used too much memory and processing power, making them hard to run on smaller devices

They were difficult to set up across different platforms

They were missing key features our models rely on

They acted like a black box - hard to understand and even harder to customize

AirTen changes the game by giving you complete control and making no compromises on size, speed, or stability.

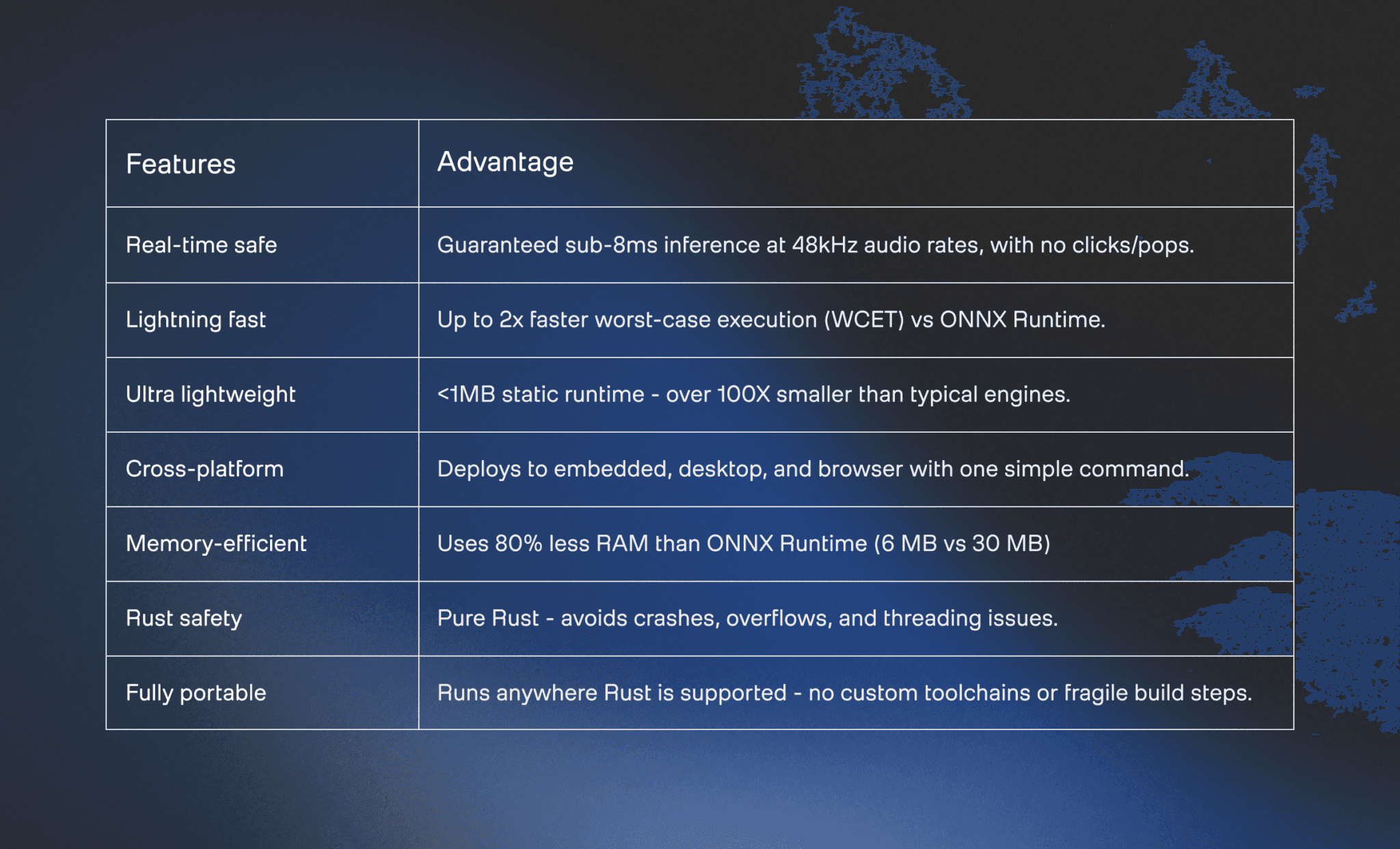

AirTen: Key benefits

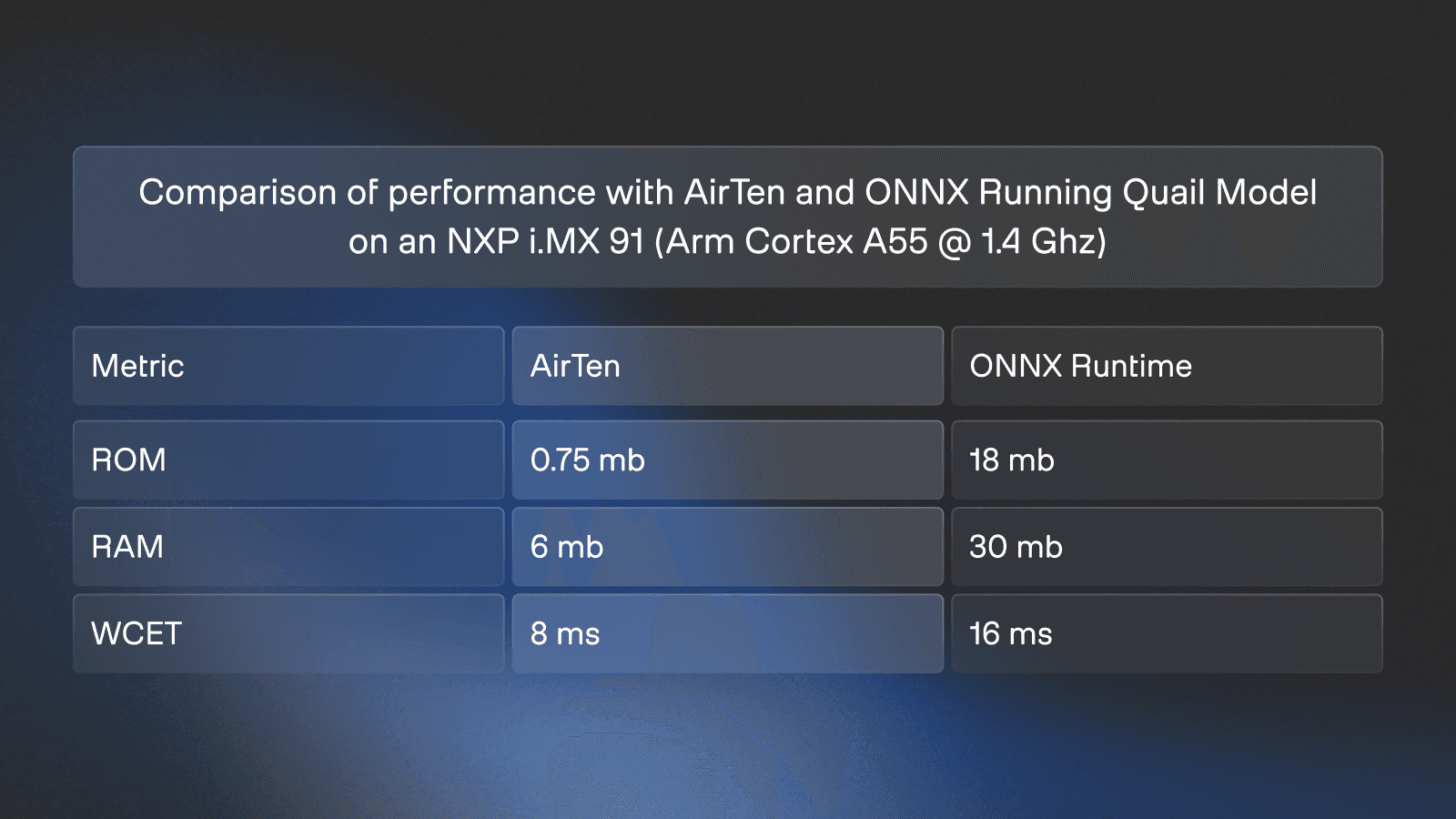

Real-world performance: How AirTen stacks up

We tested AirTen and here’s how it compares to one of the most popular inference engines out there:

In summary:

AirTen is smaller, uses less memory, and runs twice as fast - perfect for devices where every millisecond and megabyte counts.

Keen to try it out?

AirTen is now available with ai-coustics SDK - perect to pair with our real-time model families Quail and Sparrow. Get in touch to learn more.